A New AI Coding Challenge Reveals Surprising Results! 🤖💻

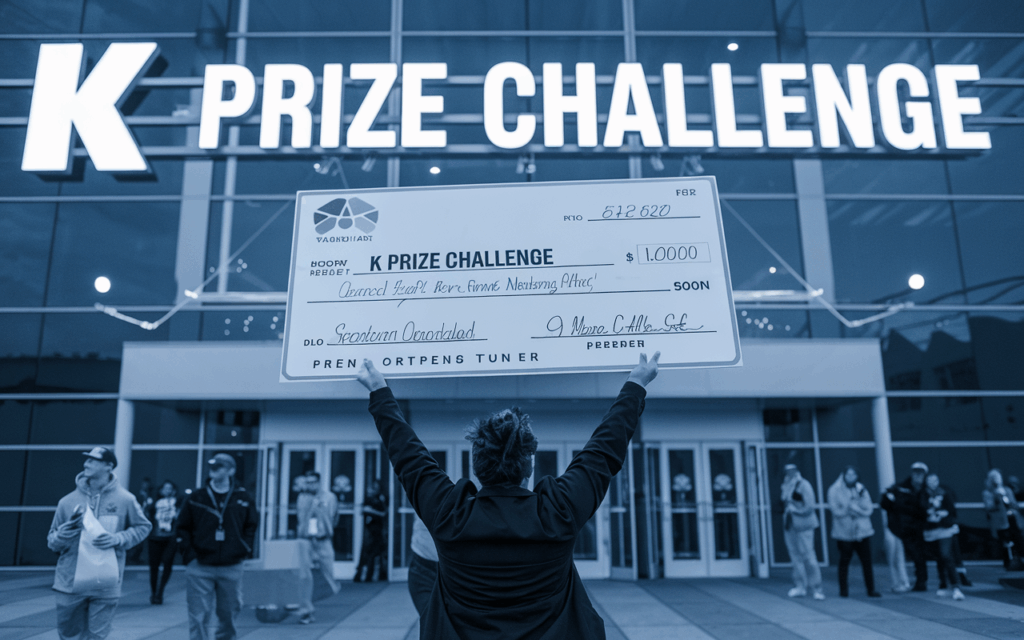

Recently, the nonprofit Laude Institute made headlines by announcing the first winner of the K Prize, a multi-round AI coding challenge launched by Andy Konwinski, co-founder of Databricks and Perplexity. The contest aims to set a new, harder benchmark for evaluating AI's coding capabilities. 🎉

Who's the Winner? 🏆

The accolade goes to Brazilian prompt engineer Eduardo Rocha de Andrade, who took home $50,000 despite answering just 7.5% of the questions correctly! 😲 That may come as a shock in a landscape full of sophisticated AI tools, but it sends a crucial message: evaluating AI isn't as straightforward as one might think.

As Konwinski put it, “We’re glad we built a benchmark that is actually hard.” The K Prize is not just a contest; it's a corrective to increasingly easy benchmarks that offer little insight into what AI can actually do. Konwinski has also pledged $1 million to the first open-source model that scores 90% or higher, a commitment that is both refreshing and much needed. Here's hoping for a robust competition! 💰

What Makes the K Prize Unique? ✨

Unlike existing benchmarks such as SWE-Bench, which can be susceptible to data contamination, the K Prize takes a "contamination-free" approach. Models were submitted by a deadline, and the test was then built only from GitHub issues flagged after that cut-off date. This design prevents competitors from simply training their models on known problems, and it also aims to level the playing field for smaller AI models. 🛠️
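To make the cut-off idea concrete, here is a minimal Python sketch of the date filter described above, assuming each candidate issue carries a creation timestamp. The `Issue` class, the `contamination_free` function, the repository names, and the cut-off date are all hypothetical illustrations, not the K Prize's actual tooling or schedule.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Issue:
    """Hypothetical issue record; fields are illustrative, not GitHub's API schema."""
    repo: str
    number: int
    created_at: datetime


def contamination_free(issues: list[Issue], cutoff: datetime) -> list[Issue]:
    """Keep only issues opened strictly after the cut-off.

    Entries are frozen before the cut-off, so none of the kept issues
    could have appeared in a submitted model's training data.
    """
    return [issue for issue in issues if issue.created_at > cutoff]


# Illustrative cut-off date (an assumption, not the K Prize's real deadline).
CUTOFF = datetime(2025, 1, 1, tzinfo=timezone.utc)

candidates = [
    Issue("example/lib", 101, datetime(2024, 12, 30, tzinfo=timezone.utc)),
    Issue("example/lib", 102, datetime(2025, 1, 5, tzinfo=timezone.utc)),
    Issue("example/app", 7, datetime(2025, 2, 14, tzinfo=timezone.utc)),
]

fresh = contamination_free(candidates, CUTOFF)
print([(i.repo, i.number) for i in fresh])  # → [('example/lib', 102), ('example/app', 7)]
```

The filter uses a strict `>` comparison so an issue opened exactly at the cut-off is excluded; a real benchmark would also need to handle time zones consistently, which is why every timestamp here is explicitly UTC.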

The stark gap between K Prize scores and those on other benchmarks reflects the challenges developers still face. SWE-Bench reports much higher success rates, while the K Prize exposes significant barriers that remain for coding AI.

The Implications Moving Forward 📈

With AI touted as a force that could revolutionize industries such as healthcare and law, it's crucial that we heed what these results imply. Konwinski's comments on the K Prize serve as a reality check: current AI tools need to improve before we can truly trust them with critical tasks. If the winning entry solves only 7.5% of the problems, we need to re-evaluate the narrative about AI's readiness for real-world applications.

As Princeton researcher Sayash Kapoor points out, building new tests is pivotal for understanding what AI can and cannot do today. Only through rigorous evaluation can we separate the actual abilities of AI models from the hype surrounding them.

Your Thoughts? 💭

What do you think about the results of the K Prize and its implications for the future of AI in software engineering? Are we facing a reality check on the capabilities of AI that too many have taken for granted? Let’s hear your thoughts in the comments below!

Stay tuned for more updates on AI developments, and be sure to share this post with fellow tech enthusiasts! 🚀

Hashtags

#AICodingChallenge #TechTrends
