Opening the Black Box: Anthropic's Bold Mission for AI Interpretability 🔍✨

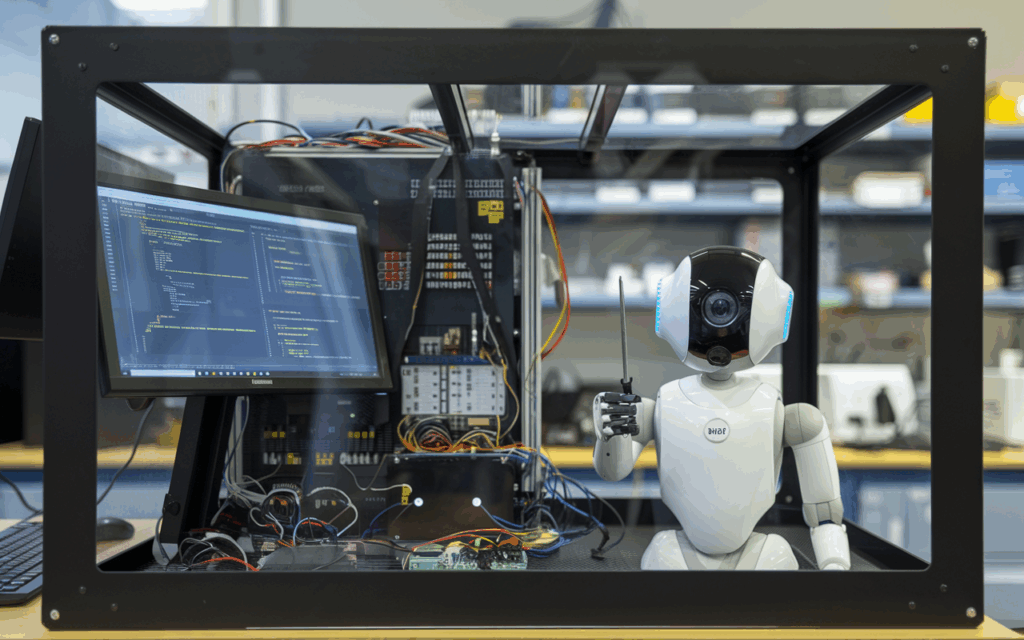

In the rapidly evolving landscape of artificial intelligence, a pressing question looms large: how do these sophisticated AI models really work? 🤖💭 Dario Amodei, co-founder and CEO of Anthropic, recently addressed this question in his essay, "The Urgency of Interpretability." His goal? For interpretability research to reliably detect most problems in AI models by 2027, making these models not just powerful, but also understandable.

The Current State of AI 🔧⚙️

Despite rapid advances, the inner workings of leading AI models remain largely mysterious. For instance, OpenAI's recent reasoning models perform better on some tasks but also produce more erroneous outputs, and the cause is not yet understood. "When a generative AI system does something, like summarize a financial document, we have no idea, at a specific or precise level, why it makes the choices it does," Amodei writes.

This concept of AI as a "black box" challenges the foundations of trust and safety. Essentially, if we deploy AI systems that can operate with significant autonomy, shouldn't we thoroughly understand their decision-making processes? 🌍🤔

Interpretability: A Path Forward 🚀📈

Anthropic's mission is to pioneer mechanistic interpretability—a domain focused on uncovering how AI models reach their conclusions. Amodei envisions a future where AI models undergo "brain scans" to identify inherent biases or errors—much like MRIs assess human health. This ambitious initiative could take a decade to realize but is critical for safely leveraging AI's potential. 🧠✨

Interestingly, Amodei's article isn't just a research proposal; it's a call to action for the tech community and regulators alike. His plea resonates: AI giants like OpenAI and Google DeepMind must enhance their interpretability research and collectively foster an environment where understanding AI is prioritized over mere capability expansion. 🏢💪

A Collaborative Approach to AI Safety 🤝🛡️

Anthropic distinguishes itself by championing safety. Unlike others in the tech sphere, it supports thoughtful regulations that mandate transparency in AI safety practices. Amodei’s push for "light-touch" regulations exemplifies a proactive approach to ensuring that this technology is developed responsibly, minimizing the risk of misuse or of flaws going unnoticed in AI deployment.

In today's fast-paced tech landscape, collaboration is crucial. Amodei's essay goes beyond a friendly nudge: he argues that cooperation among industry leaders is essential to advancing AI interpretability. The repercussions of AI capabilities outpacing our understanding could be monumental, affecting economies and national security across the globe. 🌐🛡️

Conclusion: The Future of AI is Bright 🌟🔮

The road ahead for AI interpretability is not just a technical challenge; it’s a societal imperative. Addressing it opens the door to a safer and more transparent AI landscape, fostering innovation while minimizing risks. With major companies like Anthropic pushing for this understanding, the future of AI appears brighter, provided we heed the lessons of past mistakes and prioritize human oversight alongside technological advancement.

For more details on Dario Amodei's vision and the strides made by Anthropic, read his full essay, "The Urgency of Interpretability."

Let's keep the conversation going! How do you feel about the future of AI and interpretability? Drop your thoughts below! 💬👇

#AI #MachineLearning #Anthropic #AIsafety
