Google’s New Emotion-Detecting AI: A Double-Edged Sword? 🤖

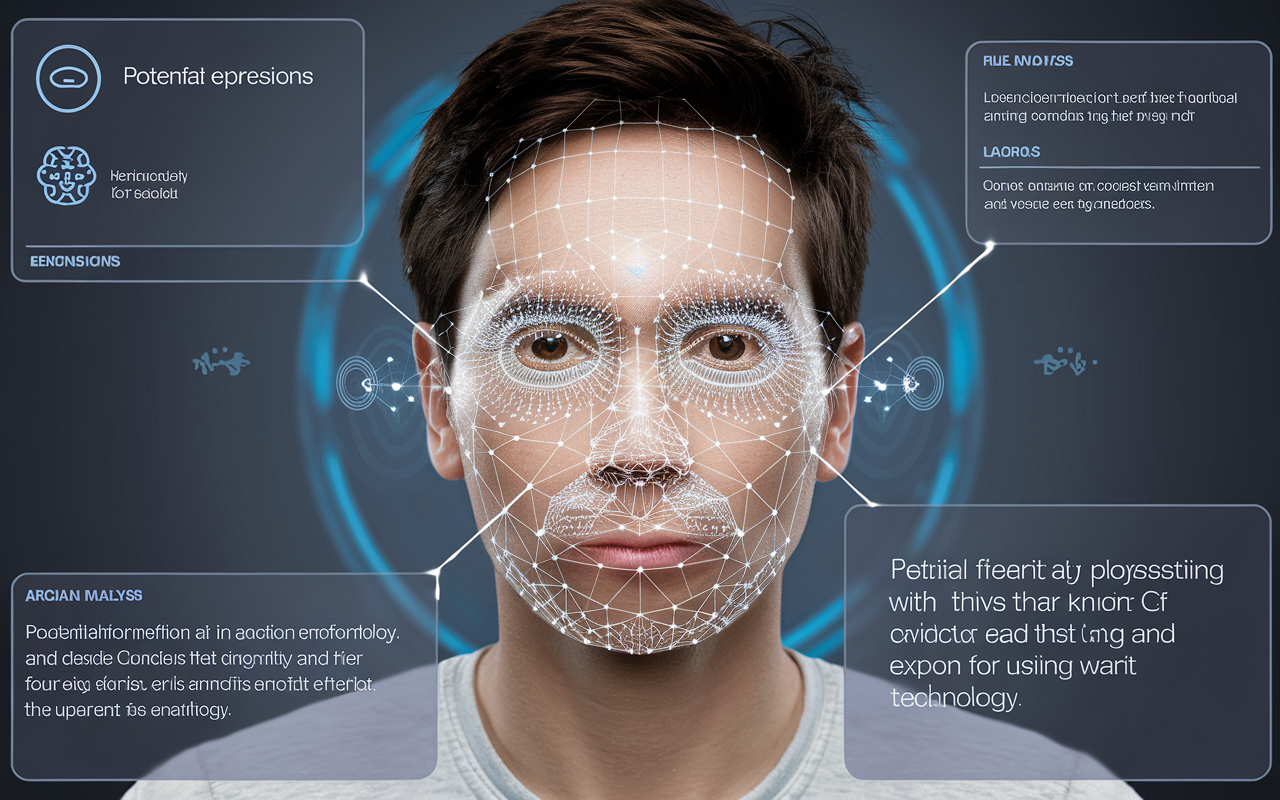

In a recent announcement that has made waves in the tech community, Google unveiled its latest venture into artificial intelligence: PaliGemma 2. This new family of AI models not only analyzes images but, according to Google, can “identify” emotions in them. While this sounds impressive on the surface, experts are raising serious concerns about the implications of such technology. 😟

The Innovation Behind PaliGemma 2 💡

Launched on December 5, 2024, PaliGemma 2 generates detailed, contextually relevant captions for images and can answer questions about the people depicted in them. Google emphasized that the model goes beyond object identification to describe actions and emotions. However, emotion recognition does not work out of the box: the model has to be carefully fine-tuned for the purpose, and the accuracy of such systems is already under scrutiny.

Experts Weigh In: Alarm Bells Ringing 🔔

Sandra Wachter, a professor of data ethics and AI at the Oxford Internet Institute, warns that the very idea of reading emotions is fraught with complications. “It’s like asking a Magic 8 Ball for advice,” she remarked, echoing the concerns of many in the field. More broadly, the claim that machines can accurately interpret human feelings raises ethical questions about consent, privacy, and autonomy.

The emotional spectrum is intricate and often shaped by personal and cultural context, which AI may struggle to grasp. Mike Cook, a research fellow specializing in AI, pointed out that while AI might catch some “generic signifiers,” it can never fully decode human emotions. This limitation inevitably leads to unreliability and bias, both of which can manifest in dangerous ways.

The Risks of Emotion Detection Systems ⚠️

Numerous studies, including one from MIT, have demonstrated that AI models trained on biased datasets tend to favor certain expressions while misinterpreting others. In fact, some systems have been shown to inaccurately assign negative emotions to faces based on race, which could worsen existing societal inequalities.

Despite Google’s claims of having conducted “extensive testing” to evaluate demographic biases in PaliGemma 2, skepticism remains high. Critics argue that the benchmarks used for comparison don’t adequately capture the diversity of human emotional expression. Moreover, there is a growing fear that openly available models like PaliGemma 2 could be misused in high-risk contexts such as law enforcement and employment, leading to further discrimination.

Looking Ahead: Responsible Innovation Needed 🔍

As the debate unfolds, the question remains: how do we ensure that advancements in AI don’t lead us down a dystopian path where job opportunities and social interactions hinge on algorithmic interpretations of our emotions? Sandra Wachter insists that responsible innovation requires a serious commitment to thinking about potential consequences throughout the entire lifecycle of the product.

While PaliGemma 2 shines a spotlight on the rapidly evolving field of AI, its rollout must be approached with caution. There’s an urgent need for robust regulations that can protect against the misuse of such technology while fostering genuine innovation. 🌍

If you'd like to read more on this topic, check out the full article on TechCrunch 📖.

Feel free to share your thoughts! Do you think AI models like PaliGemma 2 can bring positive change, or do the potential risks outweigh the benefits? 🤔

#AI #EmotionDetection #EthicsInAI
